A Basic Introduction to Tokenizers

Intro

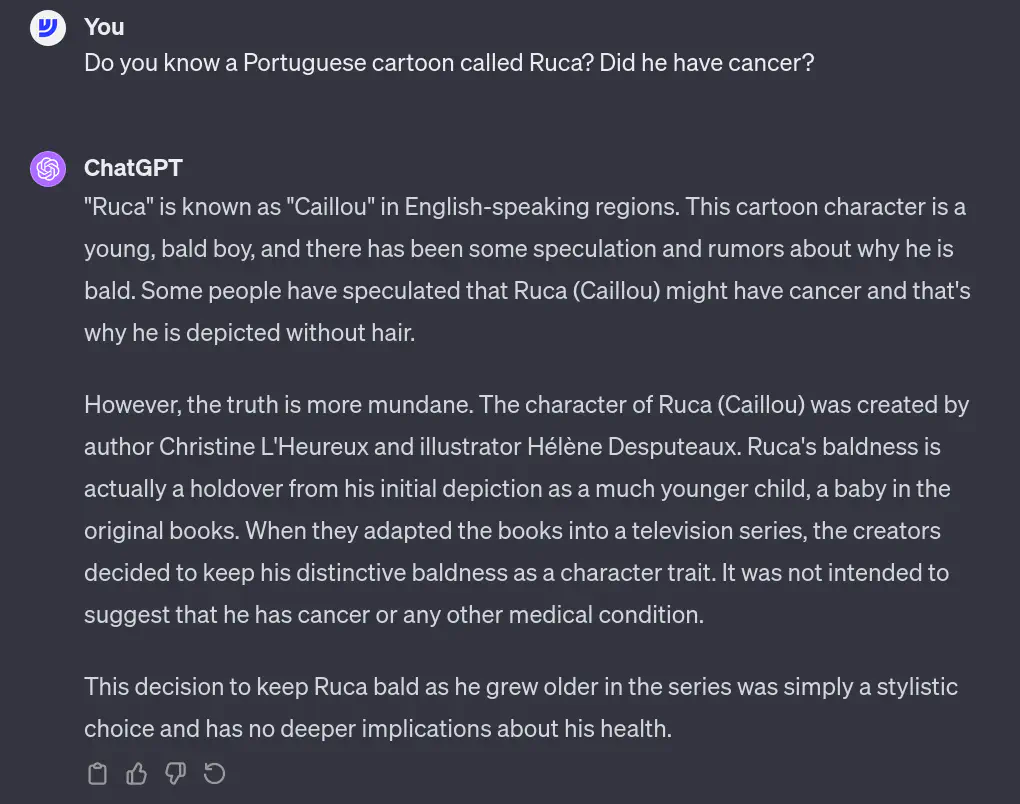

Computers cannot read or understand text the way we humans do; they can only read 0s and 1s. Unless you have been living under a rock, you have probably noticed that NLP (Natural Language Processing), a field of AI (Artificial Intelligence), has been getting a lot of mainstream media attention thanks to the incredible things it has been achieving with LLMs (Large Language Models). We can ask ChatGPT, which uses OpenAI’s own LLM called GPT, to generate text based on any input text we feed it. As we can see in the image below, the results are quite impressive (I didn’t know that Ruca was called Caillou in other countries. The more you know):

All these years I thought he had cancer

We can also ask DALL-E, whose first version was built on a variant of OpenAI’s GPT-3, to generate amazingly accurate and realistic images from a text input:

Notice how he is not smiling… The tuition is really affecting him

You get the point by now: this LLM stuff is pretty impressive. How do they work, though? Well, it’s quite complex and there are a lot of moving parts involved. Basically, our input text goes through a pipeline that converts it into numbers, which computers can work with, and tokenization sits right at the beginning of that pipeline.
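To make the "text into numbers" idea concrete, here is a minimal sketch in Python. The sentence, the `build_vocab` helper and the space-splitting tokenizer are illustrative assumptions only; real LLM pipelines use far more elaborate tokenizers and vocabularies.

```python
def build_vocab(tokens):
    """Assign an integer ID to each distinct token, in order of first appearance."""
    vocab = {}
    for token in tokens:
        if token not in vocab:
            vocab[token] = len(vocab)
    return vocab


text = "the cat sat on the mat"      # illustrative sentence, not from a real dataset
tokens = text.split(" ")             # toy tokenizer: split on single spaces
vocab = build_vocab(tokens)          # token -> integer ID
ids = [vocab[token] for token in tokens]

print(tokens)  # ['the', 'cat', 'sat', 'on', 'the', 'mat']
print(ids)     # [0, 1, 2, 3, 0, 4]
```

Notice that both occurrences of "the" map to the same ID: the model only ever sees these numbers, never the raw text.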

What is it? And why tokenize in the first place?

Tokenization is the process of splitting text into smaller pieces that we call tokens. This process greatly affects the performance of our models, so it must be done with great care. If you were teaching a child how to read, would you straight up make them read Shakespeare? Probably not. You would probably first teach them how the alphabet works, how to form words, how words relate to each other, and so on and so forth. Essentially, you would start by building their vocabulary. With computer models it’s the same thing: we need to separate the text into “digestible” parts in order to build their vocabulary. That’s why we tokenize.

How do we tokenize?

There are different methods to tokenize text, and they fall into essentially 3 groups: word based tokenizers, character based tokenizers and subword tokenizers. Most models nowadays use subword tokenizers, as they tend to give better results.

Word Based Tokenizers

Word based tokenizers split text using a specified separator and treat each resulting piece of text as a token. For instance, take a look at this sentence:

“I don’t love colddogs.”

Note: My auto corrector is currently telling me that “colddogs” is not a real word but hotdogs is… What a complex world we live in. For the sake of justice, we will pretend that “colddogs” is a real word.

If we were to tokenize that sentence using word based tokenization with a single space as the separator, we would end up with the following tokens:

“I”, “don’t”, “love”, “colddogs.”
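In Python, this kind of split can be sketched with the built-in `str.split`. The `word_tokenize` name is just a label chosen for this example, not a library function.

```python
def word_tokenize(text, separator=" "):
    """Split text on the given separator; every resulting piece is a token."""
    return text.split(separator)


tokens = word_tokenize("I don't love colddogs.")
print(tokens)  # ['I', "don't", 'love', 'colddogs.']
```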

We can already see some problems with this tokenization. Take a look at the tokens “don’t” and “colddogs.”. What if we were instead tokenizing this text:

“colddogs? I don’t love colddogs.”

Now after tokenization we would have:

“colddogs?”, “I”, “don’t”, “love”, “colddogs.”

We have two different tokens (“colddogs.” and “colddogs?”) that are trying to encapsulate the same meaning, in this case “colddogs”. As the text gets bigger, this leads to an unnecessary increase in vocabulary size and, with it, in the computational resources we use. To combat this we could try separating on punctuation as well, but by doing that we would run into another problem. If we used punctuation to split the word “don’t”, for example, we would end up with three tokens, “don”, “’” and “t”, which isn’t very effective because those tokens don’t really mean anything individually. A better split would be something like “do” and “n’t”, because now both tokens carry meaning. If our model later encounters a word like “doesn’t”, even one it has never seen before, it can still infer some of its meaning from the “n’t” part that it already knows about. The same can be said about the word “colddogs” (a better split would be “cold” + “dogs”).

Besides the problems already mentioned, the main issue with this approach is the huge vocabulary it creates, which is computationally very expensive (there are more than 200 000 words in English). To deal with this we could limit our vocabulary to a certain number of tokens, but we might lose important information by doing so. Trying to patch the problems posed by this approach is treating the symptoms, not the cause.
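Both problems, the duplicated “colddogs” tokens and the meaningless pieces of “don’t”, can be reproduced with a few lines of Python. This is only a sketch: the regular expression below is one naive way of splitting on punctuation, chosen for illustration, and not what any particular library does.

```python
import re

text = "colddogs? I don't love colddogs."

# Space-only splitting: punctuation stays glued to the word before it.
space_tokens = text.split(" ")

# Naive punctuation-aware splitting: runs of word characters,
# or any single character that is neither a word character nor whitespace.
punct_tokens = re.findall(r"\w+|[^\w\s]", text)

print(sorted(set(space_tokens)))
# ['I', 'colddogs.', 'colddogs?', "don't", 'love']

print(punct_tokens)
# ['colddogs', '?', 'I', 'don', "'", 't', 'love', 'colddogs', '.']
```

The first output contains two vocabulary entries for what is really one word, while the second merges them into a single “colddogs” token at the cost of breaking “don’t” into three pieces.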

Character Based Tokenizers

A good way to solve the problems posed by word based tokenizers would be, instead of separating the text by spaces, to separate it into its individual characters. In other words, character based tokenization:

“I”, “ ”, “d”, “o”, “n”, “’”, “t”, “ ”, “l”, “o”, “v”, “e”, “ ”, “c”, “o”, “l”, “d”, “d”, “o”, “g”, “s”, “.”

And just like that we have solved the vocabulary size problem, because now our vocabulary is made up of 26 letters (plus other symbols) instead of 200 000+ words. Quite an improvement, huh :)? Although we were able to decrease the vocabulary size, we also increased the number of tokens per block of text. Just look at our example: after tokenizing it in the Word Based Tokenizers section we ended up with just 4 tokens, whereas now we have 22. This means that even though we decreased the size of the model’s vocabulary, we increased the number of lookups performed by the model, so in the end it’s a tradeoff (one of data science’s favorite words). Most popular NLP tasks we encounter today revolve around models that try to understand context at a word and phrase level, which can be hard to do with character based tokenization because in languages like English or Portuguese individual characters don’t carry any meaning on their own (unlike in languages like Chinese). A great advantage of this approach is that it leaves very few Out Of Vocabulary words (words that are not present in our vocabulary).
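The tradeoff is easy to check in Python. The sketch below uses the full example sentence from the word based section (including “don’t”, written with a straight apostrophe) and simply counts tokens under each scheme.

```python
text = "I don't love colddogs."

word_tokens = text.split(" ")  # word based: split on single spaces
char_tokens = list(text)       # character based: every character is a token

print(len(word_tokens))        # 4
print(len(char_tokens))        # 22
print(len(set(char_tokens)))   # 14 distinct characters in this sentence
```

The character vocabulary needed for this sentence is tiny, but the sequence the model has to process is more than five times longer.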

Subword Tokenization

Subword tokenization sits right in between word based tokenization and character based tokenization. It seeks to get the best of both worlds: a smaller vocabulary than a word based tokenization method, but more semantic meaning per token than a character based one. Take a look at the following sentence:

“I like tokenization”

Depending on the subword based tokenization algorithm you use, your output tokens can look something like this:

“I”, “like”, “token”, “ization”

Notice how we kept the character “I” and the word “like” as individual tokens but divided the word “tokenization” into 2 different tokens (subwords), namely “token” and “ization”. This subword splitting helps the model learn that words with the same root as “token”, like “tokens” and “tokenizing”, are similar in meaning. It also helps the model learn that “tokenization” and “modernization” are made up of different root words but share the suffix “ization” and are used in the same syntactic situations. All of the subword tokenization algorithms adhere to the following philosophy:

- Frequent words should not be divided

- Rare words should be divided into smaller meaningful subwords
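The “I like tokenization” split and the two rules above can be sketched with a toy greedy longest-match tokenizer. To be clear, this is not an implementation of WordPiece or Byte-Pair Encoding: the `subword_tokenize` function and the hand-written vocabulary are hypothetical, and real algorithms learn their vocabulary from data. It only loosely mirrors the greedy longest-match lookup that WordPiece-style tokenizers use once a vocabulary exists.

```python
def subword_tokenize(word, vocab):
    """Greedily split `word` into the longest pieces found in `vocab`."""
    pieces = []
    start = 0
    while start < len(word):
        end = len(word)
        # Shrink the candidate piece until it matches a vocabulary entry.
        while end > start and word[start:end] not in vocab:
            end -= 1
        if end == start:
            # No known piece starts here: fall back to a single character.
            end = start + 1
        pieces.append(word[start:end])
        start = end
    return pieces


# Hypothetical vocabulary: frequent words stored whole, plus a few subwords.
vocab = {"I", "like", "token", "ization", "modern"}

sentence = "I like tokenization"
tokens = [
    piece
    for word in sentence.split(" ")
    for piece in subword_tokenize(word, vocab)
]

print(tokens)                                     # ['I', 'like', 'token', 'ization']
print(subword_tokenize("modernization", vocab))   # ['modern', 'ization']
print(subword_tokenize("tokens", vocab))          # ['token', 's']
```

Frequent words that sit in the vocabulary (“I”, “like”) come out untouched, while rarer words are broken into pieces the vocabulary already knows, falling back to single characters when nothing else matches.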

Most models that have obtained state-of-the-art results in the English language use some kind of subword tokenization algorithm. A few common subword based tokenization algorithms are WordPiece, used by BERT, and Byte-Pair Encoding, used by GPT. I was planning on giving a detailed explanation of the inner workings of the Byte-Pair Encoding algorithm, but this article is already getting too long for a LinkedIn post (I originally posted this article on LinkedIn), so I will soon be posting a separate article focusing on that.

Conclusion

“Why do we even write conclusions? nobody reads them…” - Sun Tzu, the art of war